T40 Associates · AI & Technology · 5 min read

The Death of Online Trust: How AI Slop Is Drowning the Internet in Fake Content

Brace yourself: by 2026, half of everything you read online could be AI-generated, and you may not be able to tell.

AI slop is flooding the internet with synthetic text, images, and fake reviews, and you’re caught in the middle. Estimates suggest up to 50% of online content could be AI-generated by 2026. Content farms now produce 1,000 articles for under $50, prioritizing volume over value. Detection tools can’t keep pace, with false positive rates of up to 30%. The consequences range from dangerous medical misinformation to election manipulation, but practical verification strategies can help you fight back.

The Rise of AI-Generated Content and Its Rapid Spread Across Platforms

AI-generated content has exploded across the internet at a pace few predicted. You’re now seeing synthetic text, images, and videos flood every major platform. Tools like ChatGPT, Midjourney, and DALL-E have made creation effortless. Anyone can produce thousands of posts in minutes.

The spread is staggering. Studies estimate that up to 50% of internet content could be AI-generated by 2026. Facebook, X, and TikTok are drowning in machine-made material. You scroll past fake images daily without realizing it.

This isn’t slowing down. Content farms use AI to generate clickbait articles at industrial scale. Fake news sites multiply overnight. You can no longer take what you read, see, or hear at face value. The verification systems platforms rely on simply can’t keep up with this volume.

How Fake Reviews and Synthetic Articles Are Poisoning Search Results

Search results have become the next battleground in this flood of synthetic content. When you search for product reviews or expert advice, you’re increasingly encountering AI-generated text designed to manipulate your decisions. Fake reviews now contaminate major platforms at alarming rates.

Here’s what you’re up against:

- Synthetic review farms that generate thousands of fake testimonials in minutes

- AI-written articles stuffed with keywords but offering zero genuine expertise

- Fabricated comparison sites that push affiliate products through manufactured credibility

You can’t trust surface-level search results anymore. The content ranking highest often isn’t the most helpful—it’s simply the most optimized. This pollution makes finding legitimate information harder every day.

You must develop stronger verification habits. Check multiple sources. Question suspiciously perfect reviews. Your skepticism is now your best defense.

The Economic Incentives Driving the AI Slop Epidemic

Money drives the AI slop epidemic, and the economics couldn’t be simpler.

You can now produce 1,000 articles for under $50 using tools like ChatGPT or Jasper. Traditional content costs $100 to $500 per piece. The math is brutal.
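To see how lopsided that math is, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above; the variable and function names are illustrative, and the $50 batch price is treated as an upper bound.

```python
# Figures quoted in the article; names are illustrative only.
AI_BATCH_COST_USD = 50.0               # upper bound for one batch of AI articles
AI_BATCH_SIZE = 1_000                  # articles per batch
HUMAN_COST_RANGE_USD = (100.0, 500.0)  # per-piece cost of traditional content


def cost_per_article(batch_cost: float, batch_size: int) -> float:
    """Average cost of a single article in a batch."""
    return batch_cost / batch_size


ai_cost = cost_per_article(AI_BATCH_COST_USD, AI_BATCH_SIZE)
low_ratio = HUMAN_COST_RANGE_USD[0] / ai_cost
high_ratio = HUMAN_COST_RANGE_USD[1] / ai_cost

print(f"AI cost per article: ${ai_cost:.2f}")
print(f"Traditional content costs {low_ratio:,.0f}x to {high_ratio:,.0f}x more per piece")
```

At these prices an AI article costs about five cents, so a single traditionally produced piece costs roughly 2,000 to 10,000 times as much.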

Content farms exploit this gap ruthlessly. They flood websites with AI-generated material, collect ad revenue, and disappear before platforms catch on. You’re seeing the results everywhere. A single operator can run dozens of fake sites simultaneously.

The incentive structure breaks down like this: 1) Low production costs, 2) High ad payouts per pageview, 3) Minimal accountability. Advertisers pay for impressions regardless of content quality. You’re effectively funding the pollution every time you click. The system rewards volume over value.

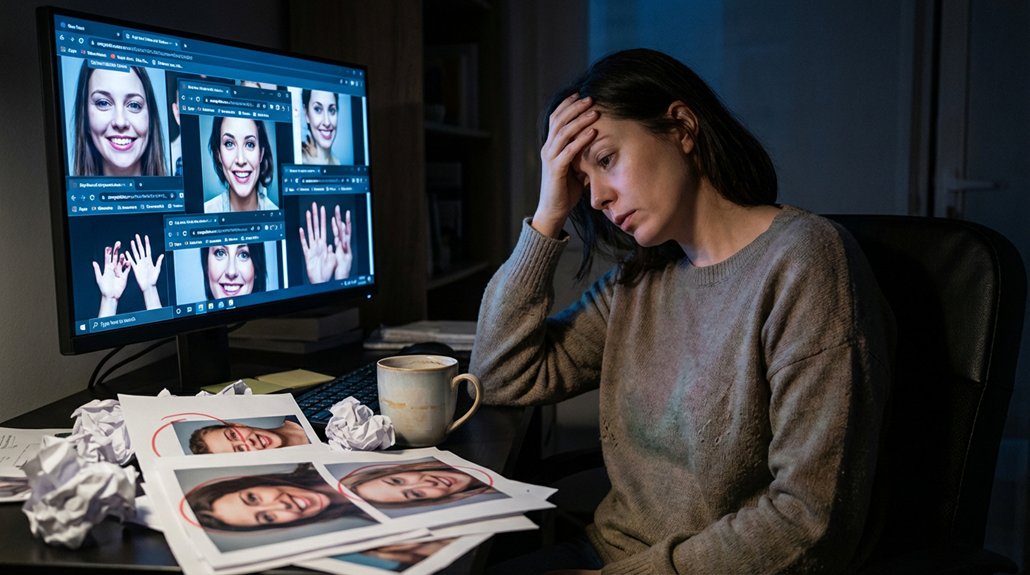

Why Detection Tools Are Failing to Keep Up With Synthetic Content

Detection tools can’t keep pace because the technology they’re fighting evolves faster than their algorithms. You’re relying on software that’s already outdated by the time it reaches your hands. AI generators receive updates weekly, while detection systems lag months behind.

The core problems you face include:

- Training data limitations: Detectors learn from yesterday’s AI outputs, not tomorrow’s improvements

- False positive rates: Current tools incorrectly flag human content up to 30% of the time

- Adversarial techniques: Generators now include built-in features specifically designed to evade detection

You can’t win this arms race with current approaches. Every time a detection tool identifies a pattern, generators adapt within weeks. The fundamental asymmetry works against you. Creating synthetic content costs pennies. Building reliable detection requires millions in research investment.
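The false positive problem is easier to appreciate with a quick calculation. The sketch below estimates what share of flagged items are actually AI-generated. The 30% false positive rate and the 50% AI share come from the figures above; the 90% detection rate is purely a hypothetical assumption for illustration, not a measured value for any real tool.

```python
def flag_precision(ai_share: float,
                   true_positive_rate: float,
                   false_positive_rate: float) -> float:
    """Fraction of flagged items that really are AI-generated."""
    flagged_ai = ai_share * true_positive_rate
    flagged_human = (1 - ai_share) * false_positive_rate
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        raise ValueError("the detector flags nothing with these rates")
    return flagged_ai / total_flagged


FALSE_POSITIVE_RATE = 0.30    # upper figure cited in the article
ASSUMED_DETECTION_RATE = 0.90 # hypothetical, for illustration only

# Half of all content is AI-generated (the article's 2026 estimate).
print(f"{flag_precision(0.50, ASSUMED_DETECTION_RATE, FALSE_POSITIVE_RATE):.0%}")

# Only one item in ten is AI-generated (for example, a classroom of essays).
print(f"{flag_precision(0.10, ASSUMED_DETECTION_RATE, FALSE_POSITIVE_RATE):.0%}")
```

Under these assumptions, about 75% of flags are correct when half the content is synthetic, which still means one flag in four lands on a human author. Where AI content is rarer, such as one item in ten, only about 25% of flags are correct, so most accusations would be wrong.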

Real-World Consequences When Nobody Can Trust What They Read Online

When readers can’t distinguish authentic journalism from AI-generated fabrications, the consequences ripple through every sector of society. You face real dangers when misinformation spreads unchecked.

Healthcare suffers first. Patients read fake medical advice and make dangerous decisions about treatments. Financial markets destabilize when fabricated news triggers panic selling. Elections become battlegrounds where synthetic content manipulates voter perception.

Your professional reputation becomes vulnerable too. Fake quotes attributed to executives damage company valuations overnight. Students submit AI-generated work, eroding educational standards across institutions.

The trust deficit creates three immediate problems: 1) legitimate sources lose credibility alongside fake ones, 2) critical information gets ignored because audiences assume everything is synthetic, and 3) bad actors exploit confusion to advance harmful agendas.

You must adapt your verification habits now. The stakes demand nothing less.

Strategies for Navigating an Internet Flooded With Machine-Made Deception

Because machine-generated content now floods every platform you use, you need a systematic approach to verify what you read before you trust it.

Start with these core verification habits:

- Check the source directly. Visit official websites instead of trusting shared links or screenshots.

- Reverse image search suspicious photos. Tools like Google Lens and TinEye reveal whether images have been manipulated or stolen.

- Cross-reference claims across multiple outlets. If only one source reports something, treat it with skepticism.

You must slow down before sharing. A five-second pause can prevent you from spreading false information to your network.

Look for bylines, publication dates, and author credentials. Question content that triggers strong emotional reactions. These tactics won’t eliminate deception, but they’ll reduce your exposure considerably.
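If you collect links while fact-checking, the cross-referencing habit can be roughed out in a few lines of Python. This is a minimal sketch, not a real verification tool: the function names and the two-source threshold are assumptions made for illustration, and distinct hostnames are only a weak proxy for independence, since syndicated or commonly owned sites can repeat the same story.

```python
from urllib.parse import urlparse


def independent_sources(urls):
    """Return the set of distinct hostnames behind a list of URLs."""
    hosts = set()
    for url in urls:
        host = urlparse(url).hostname  # None when the string has no host part
        if host is None:
            continue
        if host.startswith("www."):
            host = host[4:]  # treat www.example.com and example.com as one site
        hosts.add(host)
    return hosts


def needs_skepticism(urls, minimum_sources=2):
    """True when fewer than `minimum_sources` distinct sites report the claim."""
    return len(independent_sources(urls)) < minimum_sources


# Placeholder URLs on reserved example domains.
same_site = ["https://www.example.com/story", "https://example.com/story-amp"]
two_sites = ["https://example.com/story", "https://example.org/report"]

print(needs_skepticism(same_site))  # True: both links point to one site
print(needs_skepticism(two_sites))  # False: two distinct sites
```

A check like this only counts sites. You still need to judge whether each one is credible and whether they are all repeating a single original report.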

Conclusion

The internet you trusted is drowning in synthetic garbage. Every day, more of what you see online edges closer to machine-generated noise, and estimates suggest up to 50% of content could be AI-generated by 2026. You can’t afford to be passive anymore. Start verifying sources rigorously. Treat detection tools like Originality.ai as one signal rather than a verdict, and cross-reference everything. Trust nothing at face value. The websites and platforms won’t save you from this flood. Only your own critical thinking stands between you and total digital deception.